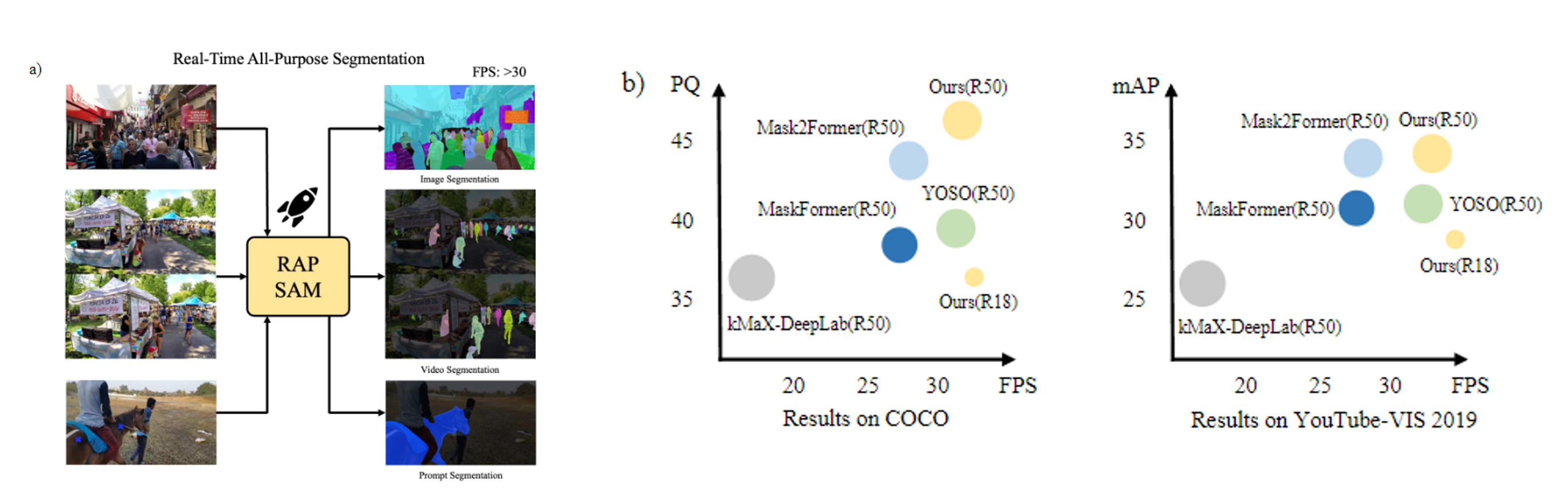

We present real-time all-purpose segmentation, which segments and recognizes objects for image, video, and interactive inputs. In addition to benchmarking, we propose a simple yet effective baseline, named RAP-SAM, which achieves the best accuracy-speed trade-off across three different tasks. Real-time panoptic segmentation and video instance segmentation results are shown on the right.

| Methods | SS | PS | VIS | Interactive | Multi-Task in One Model | Real Time |
|---|---|---|---|---|---|---|
| ICNet | ✔ | ✘ | ✘ | ✘ | ✘ | ✔ |
| Bi-Seg | ✔ | ✘ | ✘ | ✘ | ✘ | ✔ |
| YOSO | ✔ | ✔ | ✘ | ✘ | ✘ | ✔ |
| Mobile-VIS | ✘ | ✘ | ✔ | ✘ | ✘ | ✔ |
| SAM | ✘ | ✘ | ✘ | ✔ | ✘ | ✘ |
| Mask2Former | ✔ | ✔ | ✘ | ✘ | ✘ | ✘ |
| Video K-Net | ✘ | ✔ | ✔ | ✘ | ✘ | ✘ |
| OneFormer | ✔ | ✔ | ✘ | ✘ | ✔ | ✘ |
| RAP-SAM (Ours) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |